AI Voice Cloning Scams

What is an AI voice cloning scam

AI voice cloning is a method of copying someone’s voice using advanced technologies like audio signal processing, deep learning, and neural networks. These tools study a person’s unique way of speaking, including tone, pitch, rhythm, and pronunciation, to create a voice that sounds almost identical. Scammers use this technology to deceive people by making fake voices that are very hard to tell apart from the real ones. This makes it a powerful tool for deception and fraud.

How do AI voice scams work

Scammers only need a few seconds of a person’s voice recording to create a clone. This short clip serves as the foundation for building a digital copy of the voice.

Speech-analysis software studies the recording to capture the unique aspects of the person’s speaking style. This includes their pauses, accent, pronunciation, tone, and speaking rhythm, so that the replica is as close to the original as possible.

Using advanced tools like Text-to-Speech (TTS) systems, the software generates a digital version of the voice. This model can then produce entirely new sentences in the same voice, even if the person never said them.

Types of AI voice scams

- Corporate fraud: In India, scammers used a cloned voice of the chairman of Bharti Enterprises to trick an employee into transferring funds. Fortunately, the scam was detected in time. According to the chairman himself, the cloned voice was highly convincing, which makes the attempt particularly alarming. A similar case was reported in the UK, where scammers used AI to clone the voice of Mark Read, the CEO of WPP, and trick a senior executive into thinking they were having a real meeting with him. They created a fake WhatsApp profile with his photo and used the cloned voice in a Microsoft Teams call. The fraudsters tried to steal money and sensitive information, but the scam was caught early thanks to the alertness of the staff. In Germany, scammers used AI to copy a CEO’s voice and tricked a UK employee into transferring $243,000. The fake voice, mimicking the CEO’s tone and accent, requested an urgent payment to a "supplier." The scam was uncovered when the callers asked for more money and the employee checked with the real CEO. These cases show how dangerous voice cloning scams can be.

- Family distress scams: Reported mostly in Australia, India and the USA so far, this AI voice scam is likely to spread worldwide. Fraudsters use voice cloning to impersonate family members in urgent situations, such as a child in danger or someone involved in an accident, to trick people into sending money. The cloned voice sounds very real, making it difficult to identify as fake. The emotional distress of hearing a loved one in trouble causes victims to act quickly and transfer money without verifying the call's authenticity.

- Kidnapping scams: In this variant of the family distress scam, scammers use the cloned voice of a family member who is supposedly in danger to demand a ransom. These scams create fear and urgency, pushing victims to act without thinking. With just a small voice sample from social media or a phone call, scammers can create a convincing voice clone. This makes the scam particularly dangerous, as the voice often sounds real enough to trick victims. Panicked and with no time to verify the call, victims often end up paying the ransom.

How to protect yourself from AI voice scams

If you get a call asking for money in an emergency, even if it’s from a familiar number, take a moment to verify who’s calling. Ask questions only the real person would know the answers to. Another good step is to hang up and call the person or organization back using their official number. This way, if the scammers are faking the number, you’ll quickly find out.

If a caller is pushing you to act quickly or is not giving you any time to process information, pause. Think critically and evaluate the situation, especially if it requires you to transfer money or share sensitive details.
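The advice above can be read as a simple decision rule. The following is only an illustrative Python sketch of that rule, not a detection tool: the `CallSignals` fields, the `recommended_action` function, and the wording of the returned actions are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class CallSignals:
    """What you noticed during the call (hypothetical fields for illustration)."""
    asks_for_money: bool
    asks_for_sensitive_details: bool
    pressures_to_act_now: bool
    verified_by_callback: bool  # you hung up and reached them on a known number


def recommended_action(call: CallSignals) -> str:
    """Map the warning signs described above to a suggested next step."""
    if call.verified_by_callback:
        return "proceed with care"
    if call.asks_for_money or call.asks_for_sensitive_details:
        return "hang up and call back on a known number"
    if call.pressures_to_act_now:
        return "pause and evaluate before responding"
    return "no special action"


# An unverified, urgent request for money
urgent_call = CallSignals(
    asks_for_money=True,
    asks_for_sensitive_details=False,
    pressures_to_act_now=True,
    verified_by_callback=False,
)
print(recommended_action(urgent_call))
```

The ordering is the point of the sketch: an independent callback is the only signal that lowers the risk, so it is checked first, and any request for money or sensitive details triggers a callback no matter how familiar the voice sounds.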

We often share videos of ourselves online without a second thought. But with scammers now able to use even a short voice clip to create something harmful to frighten your loved ones, it’s time to think twice before posting.

As simple as it sounds, setting up a safe word with family members and practicing it regularly can be a lifesaver. This small step helps you tell a genuine emergency call from a fake one. It’s quick to establish and can be a powerful tool in protecting against scams or navigating actual emergencies.

Your bank details, IDs, and OTPs are meant for your use only. Sharing them with anyone can lead to severe consequences. Always keep them private and secure.

Secure your accounts with two-factor authentication (2FA) to add an extra layer of protection against fraud.
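To show why 2FA helps even when a scammer can imitate your voice, here is a minimal Python sketch of the time-based one-time password (TOTP) scheme from RFC 6238, which many authenticator apps use. It is an illustration under stated assumptions, not the implementation of any particular bank or app: the function name `totp` is chosen for this example, and the demo secret is the published RFC test value.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Return the TOTP code (RFC 6238, HMAC-SHA1) for the given Unix time."""
    if at is None:
        at = time.time()
    # Number of whole time steps since the Unix epoch
    counter = int(at) // step
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low 4 bits of the last byte pick an offset
    offset = mac[-1] & 0x0F
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


# Demo secret from the RFC 6238 test vectors; never hard-code a real secret.
DEMO_SECRET = b"12345678901234567890"
print(totp(DEMO_SECRET))
```

The code changes every 30 seconds and depends on a secret shared only between your device and the service, so a cloned voice alone is not enough to produce it. This is also why you should never read such a code out to a caller.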

If you suspect an AI voice scam, report it right away to your local cybercrime authorities or designated fraud reporting agencies. Prompt action can help prevent further harm and assist in investigating the scam.

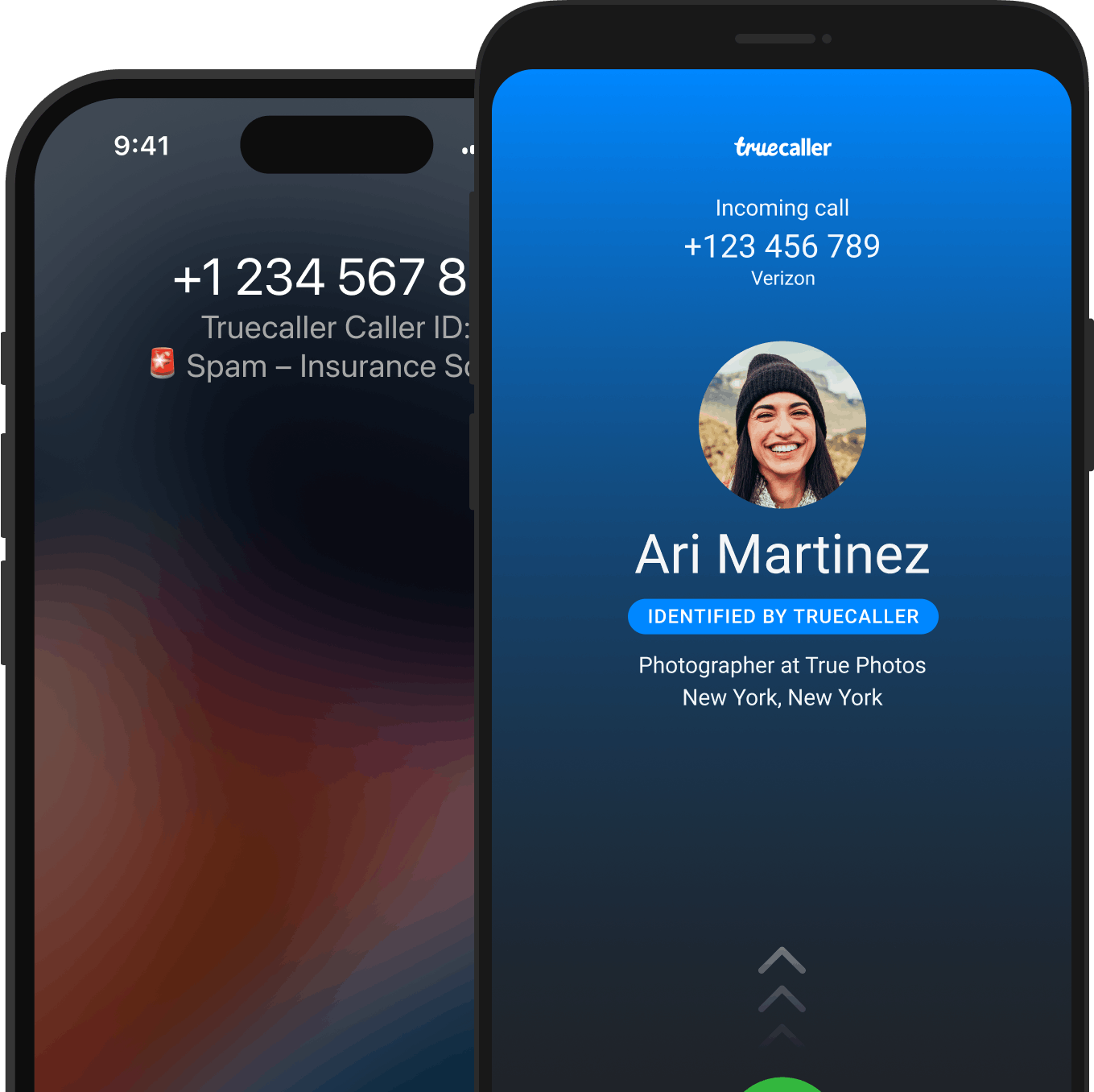

Trusted apps like Truecaller can be invaluable in protecting you from harassment. The Truecaller app helps filter out scammers and unwanted calls, acting as a reliable shield against unwanted disruptions. It also includes an AI Call Scanner feature.

Where to report an AI voice scam

AI-powered voice cloning tools have become a weapon for scammers worldwide. They mimic voices to trick people, often calling loved ones with fake emergencies and asking for money. If you’ve fallen victim to this type of scam, here are the steps that apply wherever you live:

- Your local law enforcement agency.

- Consumer protection agencies.

- Download Truecaller for future safeguarding. It has an extensive community and shares regular scam alerts with its app users. Also report the scammer’s number on Truecaller so that the rest of the community is protected.

Country-wise reporting authorities

If you are in the United States, you can reach out to these agencies:

- Federal Trade Commission (FTC): You can file a complaint with the FTC online at https://www.ftccomplaintassistant.gov/

- Internet Crime Complaint Center (IC3): You can file a complaint with the IC3 at https://www.ic3.gov/

Reporting the scam on Truecaller will help prevent others from becoming victims.

If you are in India, you can reach out to these agencies:

- For immediate assistance and guidance on cyber fraud, call 1930 (toll-free).

- File a cyber crime report on https://cybercrime.gov.in/ or https://sancharsaathi.gov.in/sfc/Home/sfc-complaint.jsp

- Access the list of state-wise nodal officers and their contact details from https://cybercrime.gov.in/Webform/Crime_NodalGrivanceList.aspx

- Serious Fraud Investigation Office: https://sfio.gov.in/

- Chakshu - Report suspected fraud communication: https://services.india.gov.in/service/detail/chakshu-report-suspected-fraud-communication

Reporting the scam on Truecaller will help prevent others from becoming victims.

If you are in Canada, you can reach out to these agencies:

- Local police (non-emergency line)

- Canadian Anti-Fraud Centre (CAFC): https://antifraudcentre-centreantifraude.ca/report-signalez-eng.htm

Reporting the scam on Truecaller will help prevent others from becoming victims.

If you are in the United Kingdom, you can reach out to these agencies:

- Action Fraud: https://www.actionfraud.police.uk/charities

- Fundraising Regulator: https://www.fundraisingregulator.org.uk/complaints

- GOV.UK: https://www.gov.uk/report-suspicious-emails-websites-phishing

- National Cyber Security Centre: https://www.ncsc.gov.uk/

Reporting the scam on Truecaller will help prevent others from becoming victims.

If you are in Germany, you can reach out to these agencies:

- Local police

- Federal Criminal Police Office (Bundeskriminalamt - BKA): https://www.polizei.de/Polizei/DE/Einrichtungen/ZAC/zac_node.html

Reporting the scam on Truecaller will help prevent others from becoming victims.

Conclusion

AI voice cloning scams represent a growing threat, using advanced technologies to deceive individuals and organizations. From corporate fraud to emotionally charged distress calls that prey on families, these scams are becoming increasingly sophisticated and hard to detect. However, by staying vigilant and adopting preventative measures, such as screening calls with apps like Truecaller, using safe words, and limiting how much of your voice you share online, you can safeguard yourself and your loved ones. It’s also crucial to report any suspicious activity promptly to authorities, as collective awareness and swift action are key to combating this problem. As technology evolves, staying informed and prepared remains the best defense against AI voice scams.